Sora - OpenAI’s Text-to-Video Tool

Discover Sora, OpenAI's text-to-video model that is set to change multimodal AI in 2024. Learn more about its capabilities, innovations, and future in this blog.

Imagine your ideas coming to life in the form of high-quality, realistic videos.

Sounds fantastic, right? Well, that's exactly what OpenAI's Sora can do for you!

Sora is a new AI-powered tool that can transform text prompts into videos that bring your imagination to life.

Key Takeaways

- Sora can create complex scenes with detailed backgrounds and multiple characters that express vivid emotions.

- With Sora, it is possible to generate multiple shots within a single video while maintaining a consistent visual style and consistent characters.

- Thanks to its deep understanding of language and its ability to simulate aspects of the physical world, Sora can create complex, realistic scenes from simple text prompts.

- Sora makes it easy to extend video clips while maintaining visual consistency, allowing you to create longer, more detailed videos without the need for additional footage.

What is Sora?

Sora is OpenAI's text-to-video model that creates realistic and imaginative scenes from text descriptions. Like other generative AI models, it takes a text description as input; its output is a video that can vary in duration, resolution, and aspect ratio.

According to research shared by OpenAI, Sora is a diffusion model: it starts with a video that looks like static noise and gradually transforms it, over many steps, into a realistic video.

Sora is trained on images and videos, which are broken down into smaller pieces of data called "patches."

For instance, one of the examples OpenAI shared is a video of a fashionable woman walking along a Tokyo street.

The complete text prompt used to generate it was:

A stylish woman walks down a lively street in Tokyo, surrounded by warm, glowing neon lights and animated city signage. She exudes confidence and ease as she strolls along, wearing a stunning ensemble consisting of a black leather jacket, a long red dress, black boots, and carrying a black purse.

She completes her look with sunglasses and bright red lipstick. The street is damp from recent rainfall, creating a reflective surface that multiplies the colorful lights. Many pedestrians walk around, adding to the bustling scene.

It's as simple as that!

But what technologies are behind it, and how exactly does it work? Let’s explore that in the next section.

Technologies Used in Sora

Sora combines several AI techniques to understand and create video content. These include:

-

Diffusion Models

During training, noise is gradually added to visual data, and the model learns to reverse that process by removing the noise step by step. This lets Sora create coherent, clear visual content starting from pure noise.
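The noising half of this process has a simple closed form in standard (DDPM-style) diffusion models, sketched below in plain Python. OpenAI has not published Sora's noise schedule, so the linear schedule, its parameters, and the function names are illustrative assumptions.

```python
import math
import random

def linear_betas(num_steps, beta_start=1e-4, beta_end=0.02):
    """Per-step noise variances for a linear schedule (illustrative values, not Sora's)."""
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]

def cumulative_alphas(betas):
    """alpha_bar_t = prod over s <= t of (1 - beta_s): the fraction of signal variance that survives."""
    out, prod = [], 1.0
    for beta in betas:
        prod *= 1.0 - beta
        out.append(prod)
    return out

def add_noise(x0, t, alpha_bars, rng):
    """Jump straight to step t: x_t = sqrt(a_bar) * x0 + sqrt(1 - a_bar) * eps, eps ~ N(0, 1)."""
    a_bar = alpha_bars[t]
    eps = [rng.gauss(0.0, 1.0) for _ in x0]
    x_t = [math.sqrt(a_bar) * x + math.sqrt(1.0 - a_bar) * e for x, e in zip(x0, eps)]
    return x_t, eps

alpha_bars = cumulative_alphas(linear_betas(1000))
frame = [0.5, -0.2, 0.9, 0.0]  # a tiny "frame" of four pixel values
slightly_noisy, _ = add_noise(frame, 10, alpha_bars, random.Random(0))
almost_pure_noise, _ = add_noise(frame, 999, alpha_bars, random.Random(0))
print(alpha_bars[10], alpha_bars[999])
```

Early steps keep almost all of the original signal, while the last step leaves almost pure noise; the model is trained to undo these steps.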

-

Spacetime Patches

Sora breaks videos down into small spacetime patches, each covering a small region of the frame across a few consecutive frames. These manageable pieces let Sora analyze, learn from, and handle videos of varied resolutions, durations, and aspect ratios.
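To make the idea of spacetime patches concrete, here is a minimal sketch that cuts a toy video into non-overlapping blocks spanning a few frames and a small region of each frame. It assumes a single-channel video stored as nested lists and works on raw pixels for readability; per OpenAI's description, Sora extracts patches from a compressed latent representation, and the patch sizes here (`pt`, `ph`, `pw`) are hypothetical.

```python
from typing import List

Video = List[List[List[float]]]  # [frames][height][width], one channel for simplicity

def spacetime_patches(video: Video, pt: int, ph: int, pw: int) -> List[List[float]]:
    """Split a video into non-overlapping pt x ph x pw blocks, each flattened to a list."""
    t, h, w = len(video), len(video[0]), len(video[0][0])
    if t % pt or h % ph or w % pw:
        raise ValueError("video dimensions must be divisible by the patch size")
    patches = []
    for t0 in range(0, t, pt):          # step through time
        for y0 in range(0, h, ph):      # step down the frame
            for x0 in range(0, w, pw):  # step across the frame
                patch = [
                    video[ti][yi][xi]
                    for ti in range(t0, t0 + pt)
                    for yi in range(y0, y0 + ph)
                    for xi in range(x0, x0 + pw)
                ]
                patches.append(patch)
    return patches

# A 4-frame, 4x6 toy video whose pixel value encodes (frame, row, column).
video = [[[100 * f + 10 * r + c for c in range(6)] for r in range(4)] for f in range(4)]
patches = spacetime_patches(video, pt=2, ph=2, pw=3)
print(len(patches), len(patches[0]))  # 8 patches, each holding 2*2*3 = 12 values
```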

-

Transformer Architecture

Sora uses a transformer architecture, similar to the one behind GPT language models, to process video data. This allows Sora to capture both the spatial and temporal aspects of videos and to make sense of how scenes and objects change over time.
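The core operation a transformer applies to a sequence of patches is self-attention, which lets every patch token weigh information from every other token, whether it comes from the same frame or a different one. The sketch below is a generic single-head scaled dot-product attention in plain Python; OpenAI has not published Sora's exact architecture, so the token values and identity projection matrices are purely illustrative.

```python
import math
from typing import List, Tuple

Matrix = List[List[float]]

def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain matrix product of an (n x m) and an (m x p) matrix."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def softmax(row: List[float]) -> List[float]:
    m = max(row)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(tokens: Matrix, wq: Matrix, wk: Matrix, wv: Matrix) -> Tuple[Matrix, Matrix]:
    """Single-head scaled dot-product self-attention over a sequence of patch tokens."""
    q, k, v = matmul(tokens, wq), matmul(tokens, wk), matmul(tokens, wv)
    d = len(q[0])
    k_t = [list(col) for col in zip(*k)]  # transpose K so Q @ K^T gives token-to-token scores
    scores = [[s / math.sqrt(d) for s in row] for row in matmul(q, k_t)]
    weights = [softmax(row) for row in scores]  # row i: how much token i attends to each token
    return matmul(weights, v), weights

# Three hypothetical patch tokens (say, two patches from frame 1 and one from frame 2),
# each embedded as a 2-dimensional vector; identity projections keep the example readable.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
identity = [[1.0, 0.0], [0.0, 1.0]]
output, weights = self_attention(tokens, identity, identity, identity)
print(weights)
```

Because attention runs over the whole token sequence, a patch can draw on patches from other frames, which is how a transformer can model change over time.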

How Does Sora Work?

Sora uses machine-learning techniques to create videos from text descriptions. The video creation process can be broken down into a series of steps:

-

Receiving a Text Description

The process begins with the user providing a text prompt that describes the video's characters, style, setting, and other essential elements. The prompt could be something as simple as "an old woman playing the piano."

-

Turning Videos Into Patches

During training, Sora breaks existing videos down into spacetime patches. Each video is first compressed into a lower-dimensional latent space and then split into small, manageable patches that represent different regions and moments of the video.
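The compress-then-patch step can be illustrated with a toy example. Here a hypothetical `toy_compress` function averages blocks of frames and pixels as a stand-in for the learned encoder Sora actually uses (a trained network, not simple averaging), and `patch_count` shows how much shorter the resulting patch sequence becomes.

```python
from typing import List

Video = List[List[List[float]]]  # [frames][height][width], one channel

def toy_compress(video: Video, ft: int, fs: int) -> Video:
    """Hypothetical stand-in for a learned video encoder.

    Averages each block of ft frames x fs x fs pixels into one value; leftover
    frames or pixels that do not fill a whole block are dropped.
    """
    t, h, w = len(video), len(video[0]), len(video[0][0])
    latent = []
    for t0 in range(0, t - t % ft, ft):
        frame = []
        for y0 in range(0, h - h % fs, fs):
            row = []
            for x0 in range(0, w - w % fs, fs):
                block = [video[ti][yi][xi]
                         for ti in range(t0, t0 + ft)
                         for yi in range(y0, y0 + fs)
                         for xi in range(x0, x0 + fs)]
                row.append(sum(block) / len(block))
            frame.append(row)
        latent.append(frame)
    return latent

def patch_count(video: Video, pt: int, ph: int, pw: int) -> int:
    """How many pt x ph x pw patches the (possibly compressed) video splits into."""
    return (len(video) // pt) * (len(video[0]) // ph) * (len(video[0][0]) // pw)

# A 16-frame, 64x64 toy clip of constant brightness.
clip = [[[0.5] * 64 for _ in range(64)] for _ in range(16)]
latent = toy_compress(clip, ft=4, fs=8)  # -> 4 frames of 8x8
print(patch_count(clip, 1, 2, 2), patch_count(latent, 1, 2, 2))  # 16384 64
```

With the same patch size, the compressed version yields 64 patches instead of 16,384, a far shorter sequence for a transformer to process.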

-

Comprehending From Patches

By analyzing these patches across vast amounts of training data, Sora learns the dynamics and patterns of various objects and scenes. For example, it learns how an old woman might appear in a scene and how the piano keys should move for the performance to look realistic.

-

Generating New Patches

Guided by the text description, Sora uses what it has learned to generate new patches that match the prompted scene. It works out what the patches for "an old lady playing the piano" should look like, taking into account the woman, the lighting, and the overall setting.
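One common way text-conditioned diffusion models steer generation toward a prompt is classifier-free guidance. OpenAI has not stated that Sora uses it, so treat this as an assumption: the sketch combines a prompt-conditioned and an unconditioned prediction and amplifies their difference by a guidance `scale`. The `toy_model` denoiser is hypothetical.

```python
from typing import Callable, List, Optional

Denoiser = Callable[[List[float], int, Optional[str]], List[float]]

def guided_noise_prediction(model: Denoiser, x_t: List[float], t: int,
                            prompt: str, scale: float) -> List[float]:
    """Classifier-free guidance: push the prediction further toward the prompt-conditioned one."""
    uncond = model(x_t, t, None)  # prediction with the prompt dropped
    cond = model(x_t, t, prompt)  # prediction given the prompt
    return [u + scale * (c - u) for u, c in zip(uncond, cond)]

def toy_model(x_t: List[float], t: int, prompt: Optional[str]) -> List[float]:
    """Hypothetical stand-in denoiser: having a prompt simply shifts every value by 1."""
    shift = 1.0 if prompt else 0.0
    return [v + shift for v in x_t]

print(guided_noise_prediction(toy_model, [0.0, 2.0], t=10,
                              prompt="an old lady playing the piano", scale=3.0))  # [3.0, 5.0]
```

A `scale` of 1 reproduces the plain conditioned prediction; larger values follow the prompt more strongly at some cost in variety.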

-

Assembling the Video

Finally, the generated patches are assembled and decoded back into a coherent video. The goal is smooth, natural motion and scene transitions, with the overall video matching the initial description both contextually and visually.
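Before decoding, the generated patches have to be put back in their places. The sketch below handles only that layout step, assuming patches are ordered time-first, then by row, then by column (a simple raster order); the learned decoder that maps latents back to pixels is omitted, and `assemble_video` is a hypothetical helper.

```python
from typing import List, Tuple

Video = List[List[List[float]]]  # [frames][height][width]

def assemble_video(patches: List[List[float]], grid: Tuple[int, int, int],
                   patch: Tuple[int, int, int]) -> Video:
    """Place flattened pt x ph x pw patches back into a (frames, height, width) video.

    `grid` is the number of patches along (time, height, width); patches are
    assumed to be ordered time-first, then rows, then columns.
    """
    gt, gh, gw = grid
    pt, ph, pw = patch
    video = [[[0.0] * (gw * pw) for _ in range(gh * ph)] for _ in range(gt * pt)]
    idx = 0
    for it in range(gt):
        for iy in range(gh):
            for ix in range(gw):
                values = iter(patches[idx])  # values inside a patch are also time/row/column ordered
                idx += 1
                for ti in range(pt):
                    for yi in range(ph):
                        for xi in range(pw):
                            video[it * pt + ti][iy * ph + yi][ix * pw + xi] = next(values)
    return video

video = assemble_video([[1, 2, 3, 4], [5, 6, 7, 8]], grid=(1, 1, 2), patch=(1, 2, 2))
print(video)  # [[[1, 2, 5, 6], [3, 4, 7, 8]]]
```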

In a nutshell, Sora approaches video generation by breaking videos down into smaller, more manageable patches. It learns from vast amounts of video data and uses this knowledge to create new content based on text descriptions. This approach enables Sora to produce visually impressive videos that closely follow the user's creative vision.

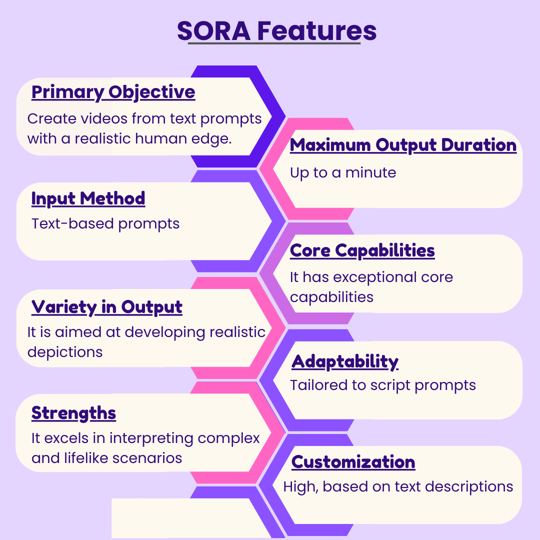

What are the Key Features of Sora?

Sora is a powerful tool that can bring still images to life with incredible precision and detail. It can also fill in missing frames in existing videos, making it a versatile tool for manipulating visual data.

Sora builds on research behind DALL·E and GPT models, and it uses the recaptioning technique from DALL·E 3, which generates highly descriptive captions for visual training data. Let's take a closer look at some of the key features that have made Sora the talk of the moment.

Turning Visual Data Into Patches -

Sora draws inspiration from large language models: where LLMs use text tokens, Sora uses visual patches. Patches have proven to be a highly effective and scalable representation for training generative models on many types of images and videos.

To create the patches, videos are first compressed into a lower-dimensional latent space, and this compressed representation is then split into spacetime patches.

Spacetime Latent Patches -

Given a compressed input video, Sora extracts a sequence of spacetime patches that act as transformer tokens. This patch-based representation lets Sora work with videos and images of different durations, resolutions, and aspect ratios.
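Because a video becomes a sequence of patch tokens, different durations, resolutions, and aspect ratios simply produce sequences of different lengths. This small helper counts the tokens for a few latent shapes; the shapes and the default patch size (1 x 2 x 2) are hypothetical, chosen only to show the idea.

```python
def num_patch_tokens(frames: int, height: int, width: int,
                     pt: int = 1, ph: int = 2, pw: int = 2) -> int:
    """Length of the token sequence for a latent video of the given (hypothetical) shape."""
    if frames % pt or height % ph or width % pw:
        raise ValueError("latent shape must be divisible by the patch size")
    return (frames // pt) * (height // ph) * (width // pw)

# Hypothetical latent shapes: widescreen, vertical, and a short square clip.
for shape in [(16, 32, 56), (16, 56, 32), (4, 32, 32)]:
    print(shape, num_patch_tokens(*shape))
```

A still image fits the same scheme as a single-frame video: `num_patch_tokens(1, 32, 32)` gives 256 tokens.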

Scaling Transformers for Video Generation -

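Sora is a diffusion transformer: given noisy input patches (and conditioning information such as a text prompt), it is trained to predict the original clean patches. Transformers have shown impressive scaling properties across many domains, including language modeling, computer vision, and image generation.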
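The training objective described here (noisy patches in, clean patches out) can be sketched as a single loss computation. This is a minimal illustration, assuming the standard diffusion noising formula and a hypothetical `model(noisy_patches, a_bar)` interface; text conditioning and the real transformer are left out.

```python
import math
import random

def denoising_loss(model, clean_patches, a_bar, rng):
    """One training example: noise the clean patches, let the model predict them back, score with MSE."""
    noisy = [[math.sqrt(a_bar) * x + math.sqrt(1.0 - a_bar) * rng.gauss(0.0, 1.0) for x in patch]
             for patch in clean_patches]
    predicted = model(noisy, a_bar)  # hypothetical interface: (noisy patches, noise level) -> clean patches
    errors = [(p - c) ** 2
              for pred_patch, clean_patch in zip(predicted, clean_patches)
              for p, c in zip(pred_patch, clean_patch)]
    return sum(errors) / len(errors)

clean = [[0.1, 0.2], [0.3, 0.4]]  # two tiny two-value patches

def oracle(noisy, a_bar):
    """A 'perfect' model for illustration: always returns the true clean patches."""
    return clean

def copycat(noisy, a_bar):
    """A useless model that returns its noisy input unchanged."""
    return noisy

print(denoising_loss(oracle, clean, 0.5, random.Random(0)),
      denoising_loss(copycat, clean, 0.5, random.Random(0)))
```

Training pushes the model from the copycat's behavior toward the oracle's, averaged over many videos and noise levels.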

Sampling Flexibility -

Sora can sample videos at a range of sizes, from widescreen 1920x1080 to vertical 1080x1920 and everything in between, making it easy to create content for a variety of devices.

Sora can create intricate scenes with several characters, precise motion types, and accurate details of the subject and the background. The model not only comprehends the user's prompt but also has an understanding of how these elements exist in the real world.

Language Understanding -

Training text-to-video systems requires a large number of videos with matching text captions. Sora applies the recaptioning technique from DALL·E 3 to videos: a highly descriptive captioner model is trained first and then used to produce text captions for all of the videos in the training set.

Animating DALL-E Images -

Sora can also take an image as input and animate it. OpenAI has shared examples of videos generated from DALL·E 2 and DALL·E 3 images.

Connecting Videos -

Sora can be used to seamlessly interpolate between two input videos with different scenes and subject compositions, creating smooth transitions.

Extending Generated Videos -

Sora can extend videos either forward or backwards in time.

Examples of OpenAI Sora

Since Sora was announced, OpenAI has shared some mesmerizing examples of videos and animations created with this text-to-video tool.

Sora Garden Gnome Example:

Prompt: A close-up view of a glass sphere that has a zen garden within it. There is a small dwarf in the sphere who is raking the zen garden and creating patterns in the sand.

Sora Vintage SUV Example:

Prompt: The camera follows behind a white vintage SUV with a black roof rack as it speeds up a steep dirt road surrounded by pine trees on a steep mountain slope, dust kicks up from its tyres, and the sunlight shines on the SUV as it speeds along the dirt road, casting a warm glow over the scene. The dirt road curves gently into the distance, with no other cars or vehicles in sight.

The trees on either side of the road are redwoods, with patches of greenery scattered throughout. The car is seen from the rear following the curve with ease, making it seem as if it is on a rugged drive through the rugged terrain. The dirt road itself is surrounded by steep hills and mountains, with a clear blue sky above with wispy clouds.

Sora Blooming Flower Example:

Prompt: A stop-motion animation of a flower growing out of the windowsill of a suburban house.

Sora Snow Dogs Example:

Prompt: A litter of golden retriever puppies playing in the snow. Their heads pop out of the snow, covered in.

Use Cases of Sora

OpenAI's Sora represents a significant advance in AI video generation. It demonstrates a deep understanding of language, visual perception, and physical dynamics.

Furthermore, it highlights the potential of AI to create engaging and immersive content for various purposes, including entertainment, education, art, and communication.

Some possible use cases of Sora are:

-

Advertising and Marketing

Digital marketers can easily create high-quality videos from text descriptions using Sora, allowing for quick testing of different concepts without needing extensive video production resources.

Prompt: A Chinese Lunar New Year celebration video with a Chinese Dragon.

-

Film Making and Entertainment

Traditional filmmaking methods can be time-consuming and costly, which limits experimentation and creative freedom. AI-driven tools like Sora can shorten the development cycle of projects, encouraging a culture of innovation and creativity within the industry.

Prompt: An extreme close-up of an old man in his 60s with grey hair and a beard. He is sitting at a café in Paris, lost in deep thought, pondering the history of the universe. His gaze is fixed on people walking by as he remains mostly still. He is dressed in a wool suit coat with a button-down shirt, wearing a brown beret and glasses, giving him a very professorial appearance.

Towards the end of the scene, he offers a subtle, closed-mouth smile as if he has discovered the answer to the mystery of life. The lighting in the scene is very cinematic, with a golden glow emanating from the Parisian streets in the background, and the depth of field is like that of cinematic 35mm film.

-

Virtual Reality and Augmented Reality

Sora could be used to quickly prototype VR and AR environments and scenarios from text descriptions.

Prompt: Historical footage of California during the Gold Rush

-

Game Development

Game developers could use Sora to create trailers from written descriptions, visualizing game worlds and characters early in development.

Prompt: A giant, towering cloud in the shape of a man looms over the earth. The cloud man shoots lightning bolts down to the earth.

The Current Impact of Sora's Approach

Sora uses a diffusion transformer architecture to produce highly realistic, complex simulations of the physical world. According to OpenAI, these capabilities emerge from large-scale training on video data rather than from explicitly programmed physical rules, much as GPT-4 learns to write code from vast amounts of training data.

Future Perspective of Sora

OpenAI is currently focused on enhancing Sora's accessibility, user-friendliness, and functionality. In the near future, Sora has the potential to become a groundbreaking tool that revolutionizes the way video content is produced in a wide range of fields, including education, entertainment, business, and science.

Bottom Line

OpenAI's Sora is an advanced model that can transform plain text into visually stunning videos. With a clear understanding of how it works, anyone can make the most of its capabilities.

OpenAI has not yet announced a specific launch date for Sora, but this AI tool is generating excitement across various industries for its potential to revolutionize video content creation.

Frequently Asked Questions

What is Sora AI?

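Sora is OpenAI's text-to-video model. It creates realistic and imaginative videos from text descriptions.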

What are the Advantages of Using Sora?

Sora can produce impressive results that would otherwise be difficult to achieve and would require a considerable amount of time and skill. Some of the advantages of using Sora include:

- Provides Versatile Features: Sora can handle complex scenes with specific motions, detailed backgrounds, and many types of characters, including fantastical creatures.

- Understands Language Deeply: Sora interprets prompt requirements accurately and can handle lengthy prompts of up to 135 words.

- Accepts Multiple Inputs: Sora goes beyond text-to-video by accepting images and text prompts to generate high-quality videos.

- Generates High-Quality Outputs: Unlike many earlier text-to-video tools, Sora creates videos of varying durations, at high resolution, and in different aspect ratios.

When will OpenAI’s Sora Launch?

There is currently no information on when Sora will be released to the public. Based on previous OpenAI releases, it is possible that a version of it may become available to a limited number of people at some point in 2024.

How does the Sora model work?

Sora is a diffusion model. Generation starts from a video that looks like static noise, and the model gradually removes the noise over many steps, transforming it into a video that matches the description in the prompt.
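The step-by-step denoising can be sketched as a sampling loop. This example uses the deterministic DDIM update, a standard diffusion sampler chosen here for illustration; OpenAI has not published which sampler Sora uses. `predict_clean` stands in for the trained model, and the noise schedule is hypothetical.

```python
import math
import random

def ddim_sample(predict_clean, size, alpha_bars, rng):
    """Deterministic DDIM-style sampling (eta = 0) with a model that predicts the clean signal."""
    x = [rng.gauss(0.0, 1.0) for _ in range(size)]  # start from pure "static" noise
    for t in range(len(alpha_bars) - 1, 0, -1):
        a_t, a_prev = alpha_bars[t], alpha_bars[t - 1]
        x0_hat = predict_clean(x, t)
        # Recover the noise implied by the current sample and the clean-signal estimate.
        eps_hat = [(xi - math.sqrt(a_t) * ci) / math.sqrt(1.0 - a_t) for xi, ci in zip(x, x0_hat)]
        # Re-noise the estimate to the slightly lower noise level of the previous step.
        x = [math.sqrt(a_prev) * ci + math.sqrt(1.0 - a_prev) * ei for ci, ei in zip(x0_hat, eps_hat)]
    return predict_clean(x, 0)  # final step: take the model's clean estimate directly

steps = 50
alpha_bars = [1.0 - (t + 1) / (steps + 1) for t in range(steps)]  # hypothetical schedule, decreasing in t
target = [0.2, -0.4, 0.7]  # what a perfect model "knows" the prompted content should be
calls = []

def oracle(x, t):
    """Hypothetical perfect model: always predicts the target values."""
    calls.append(t)
    return list(target)

video_values = ddim_sample(oracle, len(target), alpha_bars, random.Random(0))
print(video_values)  # [0.2, -0.4, 0.7]
```

A real model's estimate improves gradually as the noise level drops, which is why sampling takes many steps rather than one.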