9 Best Open-Source LLMs to Watch Out For in 2024

Discover the top open-source LLMs transforming language technology. This blog will give you crucial insights into the top open-source LLMs in 2024.


Large language models have become the cornerstones of this rapidly evolving AI world, propelling innovations and changing the way we interact with technology.

As these models continue to evolve, more and more organizations are looking to adopt them.

According to Grand View Research, the growth in the number of LLMs in use, and in the size of the associated market, may surprise you.

Open-source models play a crucial role in driving innovation, giving developers and enthusiasts alike the opportunity to dig into their inner workings and fine-tune them for specific tasks.

We'll explore some of the top open-source LLMs that are opening up new market opportunities, each bringing unique capabilities and strengths to the table.

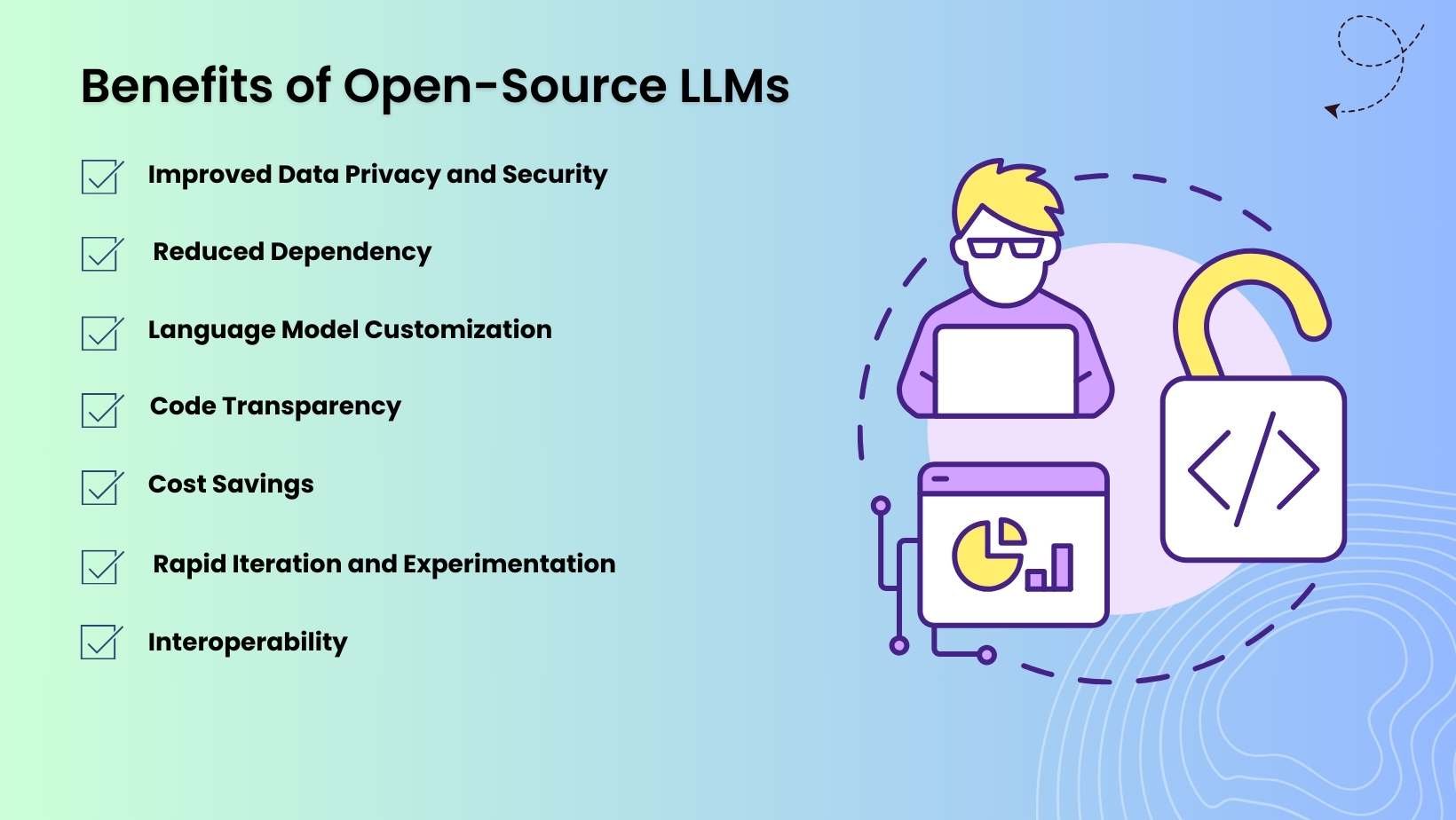

Benefits of Open-Source LLMs

Even though Google Bard and ChatGPT dominate the large language model landscape, open-source LLMs for business continue to thrive. Backed by advanced technology and abundant community resources, open-source LLMs are increasingly driving AI applications. Let's examine a few advantages of open-source LLMs.

- Improved Data Privacy and Security

Open-source LLMs let organizations deploy models on their own infrastructure, keeping sensitive data in-house and improving privacy and security.

- Reduced Dependency

Open-source LLMs reduce an organization's reliance on a single vendor, enhancing flexibility.

- Language Model Customization

Open-source LLMs offer greater flexibility to customize a model for specific industry needs, so organizations can fine-tune it to their unique requirements.

- Code Transparency

Open-source LLMs are transparent, allowing businesses to thoroughly validate and inspect a model's functionality to comply with industry standards.

- Cost Savings

By eliminating licensing fees, open-source LLMs provide a cost-effective solution for startups and enterprises.

- Rapid Iteration and Experimentation

With open-source LLMs, businesses can experiment and iterate rapidly, testing changes and rolling out updates quickly.

- Interoperability

Open-source LLMs for business are designed to work with a wide range of technologies and systems, which makes integration easy.


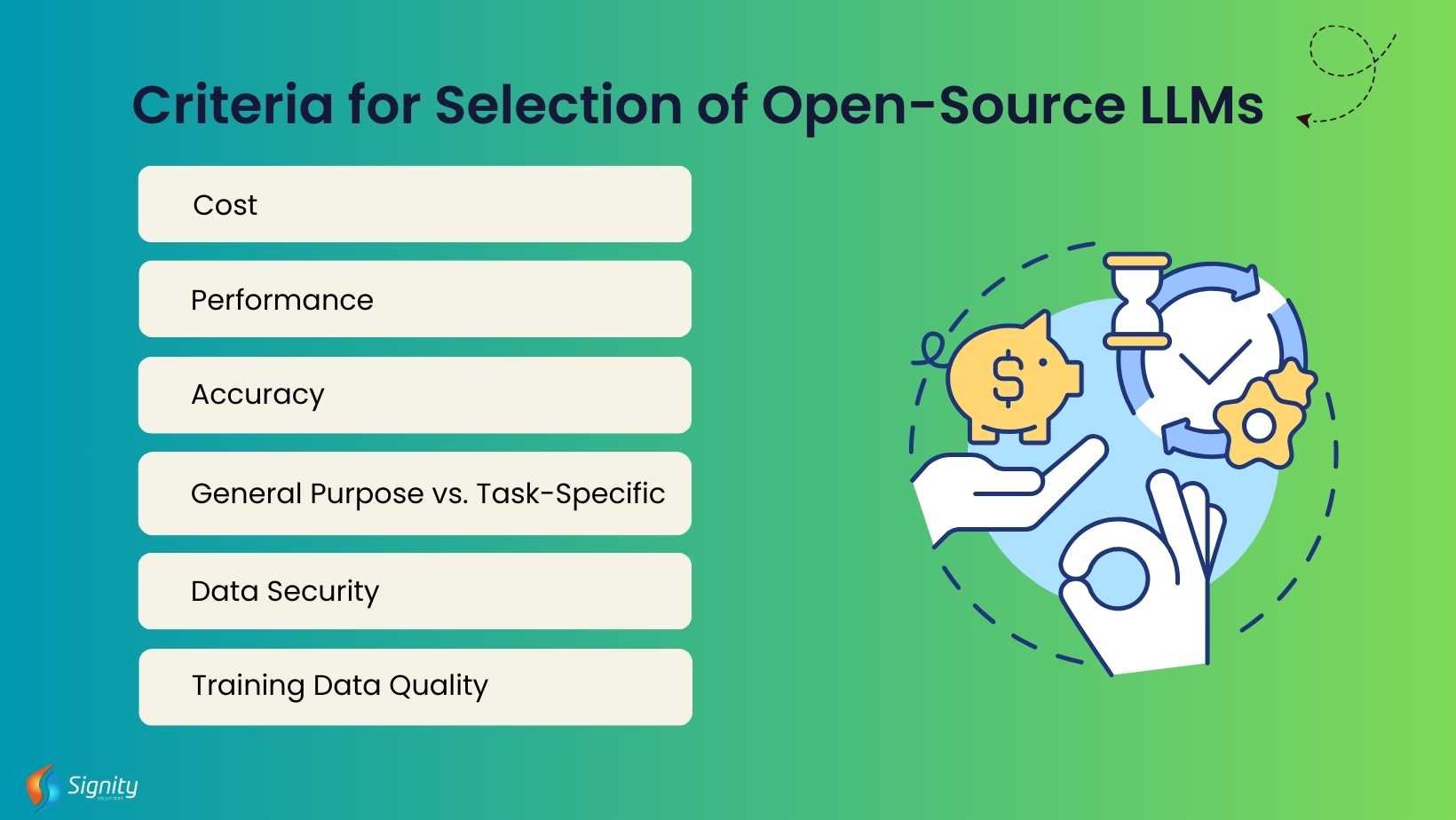

Criteria for Selection of Open-Source LLMs

You can find a wide range of open-source LLMs online, but how do you choose among so many options?

The answer is to narrow down the selection using specific criteria. The section below covers the key factors to consider before making a selection.

1. Cost

Cost is one of the most significant factors to consider. You may wonder why price matters for open-source models. Since the LLMs are open, you do not pay for the model itself, but you still need to account for associated costs such as compute resources, hosting, and training. The larger and more complex the LLM, the more it is likely to cost you.
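To put numbers on "resources required", a common back-of-the-envelope check is parameter count times bytes per parameter. The Python sketch below assumes the weights dominate memory and ignores activations, the KV cache, and framework overhead, so treat its output as a lower bound rather than a hosting quote.

```python
# Rough estimate of the memory needed just to hold a model's weights.
# Assumption: weights dominate; activations, KV cache, and framework
# overhead are ignored, so real requirements will be higher.

BYTES_PER_PARAM = {
    "fp32": 4,    # full precision
    "fp16": 2,    # half precision (bf16 is the same size)
    "int8": 1,    # 8-bit quantized weights
    "int4": 0.5,  # 4-bit quantized weights
}


def weight_memory_gb(num_params: float, precision: str = "fp16") -> float:
    """Return approximate weight memory in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


if __name__ == "__main__":
    models = [("GPT-J-6B", 6e9), ("GPT-NeoX-20B", 20e9), ("OPT-175B", 175e9)]
    for name, params in models:
        for precision in ("fp16", "int8"):
            gb = weight_memory_gb(params, precision)
            print(f"{name} @ {precision}: ~{gb:.0f} GB")
```

For example, 20 billion parameters at 2 bytes each comes to about 40 GB for GPT-NeoX-20B's weights alone, before any serving overhead, which is why hosting rather than licensing tends to drive the bill.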

2. Performance

An LLM's performance is judged on criteria such as coherence, context comprehension, and language fluency. The better a candidate scores on these criteria, the more effective it is likely to be for your use case.

3. Accuracy

Accuracy is another crucial factor. Compare candidate LLMs on the specific tasks you need them to perform, and select one based on the results of that evaluation.
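A lightweight way to run that comparison is to score every candidate on the same labelled examples drawn from your own task. In the Python sketch below, `model_a` and `model_b` are hypothetical stand-ins for calls to real LLMs, and exact-match scoring on a toy sentiment dataset is an assumption; real evaluations usually need larger datasets and task-appropriate metrics.

```python
from typing import Callable, Dict, List, Tuple

# A "model" here is any function that takes a prompt and returns a label.
ModelFn = Callable[[str], str]


def accuracy(model: ModelFn, dataset: List[Tuple[str, str]]) -> float:
    """Fraction of examples where the model's answer matches the expected label."""
    correct = sum(
        1 for prompt, expected in dataset
        if model(prompt).strip().lower() == expected.lower()
    )
    return correct / len(dataset)


def compare_models(models: Dict[str, ModelFn],
                   dataset: List[Tuple[str, str]]) -> Dict[str, float]:
    """Score every candidate on the same task-specific dataset."""
    return {name: accuracy(fn, dataset) for name, fn in models.items()}


# Toy sentiment dataset standing in for "the tasks you need them to perform".
DATASET = [
    ("I love this product", "positive"),
    ("This was a waste of money", "negative"),
    ("Absolutely fantastic service", "positive"),
    ("The update broke everything", "negative"),
]


def model_a(prompt: str) -> str:
    # Hypothetical model that always answers "positive".
    return "positive"


def model_b(prompt: str) -> str:
    # Hypothetical keyword-based model.
    negative_words = ("waste", "broke", "bad")
    return "negative" if any(w in prompt.lower() for w in negative_words) else "positive"


if __name__ == "__main__":
    scores = compare_models({"model_a": model_a, "model_b": model_b}, DATASET)
    best = max(scores, key=scores.get)
    print(scores, "best:", best)
```

Keeping the dataset fixed across candidates is the important design choice: it makes the scores directly comparable.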

4. General Purpose vs. Task-Specific

Before deciding upon one, analyze whether you need an LLM that resolves specific use cases only or one that covers a comprehensive task spectrum.

Pro-Tip: Opt for a domain-specific LLM if you need to solve domain-specific tasks; otherwise, a general-purpose LLM can handle a broad range of tasks efficiently.

5. Data Security

Data security is another critical aspect. Here, retrieval-augmented generation (RAG) can help: it lets you control data access with document-level security and restrict permissions to specific data.
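In practice, document-level security means filtering retrieved documents against the user's permissions before anything is placed in the prompt. The Python sketch below assumes each document carries a hypothetical `allowed_groups` set and substitutes naive keyword overlap for a real vector search; it illustrates the idea rather than any particular RAG framework's API.

```python
from dataclasses import dataclass, field
from typing import List, Set


@dataclass
class Document:
    doc_id: str
    text: str
    allowed_groups: Set[str] = field(default_factory=set)  # document-level ACL


def retrieve(query: str, docs: List[Document], user_groups: Set[str],
             k: int = 2) -> List[Document]:
    """Return the top-k documents the user may see, ranked by keyword overlap."""
    # 1. Enforce permissions BEFORE ranking so restricted text never
    #    reaches the prompt sent to the LLM.
    visible = [d for d in docs if d.allowed_groups & user_groups]
    # 2. Rank the remaining documents (stand-in for a real vector search).
    terms = set(query.lower().split())
    ranked = sorted(visible,
                    key=lambda d: len(terms & set(d.text.lower().split())),
                    reverse=True)
    return ranked[:k]


def build_prompt(query: str, context: List[Document]) -> str:
    """Assemble the grounded prompt from permitted documents only."""
    joined = "\n".join(f"[{d.doc_id}] {d.text}" for d in context)
    return f"Answer using only this context:\n{joined}\n\nQuestion: {query}"


DOCS = [
    Document("hr-1", "salary bands for engineering roles", {"hr"}),
    Document("kb-1", "how to reset your vpn password", {"all"}),
    Document("kb-2", "vpn setup guide for new laptops", {"all"}),
]

if __name__ == "__main__":
    hits = retrieve("vpn password reset", DOCS, user_groups={"all"})
    print(build_prompt("How do I reset my VPN password?", hits))
```

Because the filter runs before ranking, a restricted document such as `hr-1` can never reach the LLM for a user outside the `hr` group, no matter how well it matches the query.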

6. Training Data Quality

If the quality of the training data is compromised, the outcomes will suffer too. Evaluate the data each LLM was trained on and choose one with high-quality training data.

Also, consider asking yourself the following questions before making a selection.

- What do you want to achieve with an open-source LLM?

- Why do you need an LLM at all?

- How much accuracy do you require?

- How much can you afford to invest?

- Can you achieve your goals through LLM integration?

- Which LLM use cases and applications are relevant to you?

Choose open-source LLMs wisely or partner with a reliable company to get things done for you.


Top Open-Source LLMs for 2024

While there are plenty of open-source LLMs to choose from, a few top picks are catching attention.

The graph below shows the trend in the number of LLMs introduced over the years.

However, which one to choose is still an open question. Let us look at the top 9 open-source LLMs that are helping businesses move forward more effectively.

1. GPT-NeoX-20B

GPT-NeoX-20B is an open-source large language model developed by EleutherAI. With only a few significant exceptions, its architecture largely follows that of GPT-3, making it an autoregressive transformer decoder model.
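"Autoregressive" means the model generates one token at a time, feeding each new token back in as input for the next step. The Python sketch below illustrates that loop with greedy decoding; the hard-coded `TOY_SCORES` table is a hypothetical stand-in for the neural network, and unlike a real decoder it looks only at the last token rather than the whole prefix. It is not GPT-NeoX code.

```python
from typing import Dict, List

# Hypothetical next-token scores keyed by the previous token.
TOY_SCORES: Dict[str, Dict[str, float]] = {
    "<s>": {"open": 0.9, "closed": 0.1},
    "open": {"source": 0.8, "models": 0.2},
    "source": {"models": 0.7, "</s>": 0.3},
    "models": {"</s>": 1.0},
}


def next_token_scores(prefix: List[str]) -> Dict[str, float]:
    """Stand-in for a forward pass over the prefix generated so far."""
    return TOY_SCORES.get(prefix[-1], {"</s>": 1.0})


def greedy_decode(max_new_tokens: int = 10) -> List[str]:
    """Generate tokens one at a time, appending each choice to the input."""
    tokens = ["<s>"]
    for _ in range(max_new_tokens):
        scores = next_token_scores(tokens)
        best = max(scores, key=scores.get)  # greedy: take the top-scoring token
        if best == "</s>":                  # end-of-sequence stops generation
            break
        tokens.append(best)
    return tokens[1:]


if __name__ == "__main__":
    print(" ".join(greedy_decode()))
```

Real deployments often replace the greedy `max` with sampling strategies, but the feed-the-output-back-in structure stays the same.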

It was trained with the GPT-NeoX library on The Pile, an 800GB open-source dataset hosted by The Eye.

Ideal for?

The GPT-NeoX-20B is a perfect LLM for medium-sized or large businesses, like marketing agencies and media companies, that need to generate advanced content.

The model has 20 billion parameters, as indicated by the "20B" in its name. This large parameter count lets it comprehend and produce text with a high degree of complexity and nuance.

Due to its complete open-source nature and lower cost of ownership compared to similar models of equivalent quality and size, GPT-NeoX-20B is more accessible to researchers, tech founders, and developers.

Key Features of GPT-NeoX-20B

- On a performance-adjusted basis, GPT-NeoX-20B is less expensive to implement than GPT-3.

- Known for its sophisticated natural language processing abilities, GPT-NeoX-20B belongs to the family of GPT-style models.

- Users can adjust the model to produce text outputs that adhere to particular specifications or stylistic preferences.

- The model leverages contextual information to generate text that is coherent and contextually relevant.

- GPT-NeoX-20B excels in natural language tasks such as sentiment analysis, text classification, question-answering, and language translation.

- GPT-NeoX-20B is compatible with various programming languages and frameworks for easy integration into projects.

2. GPT-J-6B

EleutherAI also developed GPT-J-6B, a generative pre-trained transformer model that produces human-like text from a prompt. It has 6 billion trainable parameters.

This model, however, is not appropriate for translating or producing text in non-English languages because it was trained exclusively on English language data.

Ideal for?

GPT-J-6b is the perfect choice for start-ups and medium-sized enterprises seeking a suitable balance between resource consumption and performance because of its comparatively smaller size and simplicity of use.

Key Features of GPT-J-6B

- GPT-J-6B is skilled at natural language understanding, allowing it to perform tasks like text classification, sentiment analysis, question answering, and language translation.

- Integrating GPT-J-6B into projects is easy because of its compatibility with various programming languages and frameworks.

- Like other language models, GPT-J-6B is capable of producing text in response to a prompt.

- GPT-J-6B was trained with a causal language modeling objective: it learns to predict the next token from the sequence that precedes it, which is also how it generates text.
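In causal (next-token) language modeling, the training targets are simply the input sequence shifted left by one position. The Python sketch below shows how one tokenized sequence turns into inputs, targets, and the equivalent (context, next token) pairs; the token IDs are hypothetical, and a real training pipeline does this on tensors with a causal attention mask rather than on Python lists.

```python
from typing import List, Tuple


def causal_lm_pairs(token_ids: List[int]) -> Tuple[List[int], List[int]]:
    """Inputs are all tokens but the last; targets are shifted left by one.

    At position i the model sees token_ids[: i + 1] and is trained to
    predict token_ids[i + 1].
    """
    return token_ids[:-1], token_ids[1:]


def training_examples(token_ids: List[int]) -> List[Tuple[List[int], int]]:
    """Spell out every (context, next_token) pair one sequence provides."""
    return [(token_ids[: i + 1], token_ids[i + 1]) for i in range(len(token_ids) - 1)]


if __name__ == "__main__":
    ids = [101, 7, 42, 9]  # hypothetical token IDs for a short sentence
    inputs, targets = causal_lm_pairs(ids)
    print("inputs: ", inputs)
    print("targets:", targets)
    for context, nxt in training_examples(ids):
        print(context, "->", nxt)
```

A single sequence of n tokens therefore yields n - 1 prediction targets in one pass, which is part of why this objective scales well to large text corpora.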

3. LLaMA 2

LLaMA stands for Large Language Model Meta AI. LLaMA 2 is an advanced AI model developed by Meta AI and released in partnership with Microsoft.

LLaMA 2 understands and generates text; it does not process images, so it is not a multimodal model. It comes in three sizes, with 7, 13, and 70 billion parameters.

It was pretrained on about 2 trillion tokens from publicly available sources, and its architecture builds on that of LLaMA 1.

Ideal for?

LLaMA 2 is an excellent option for educational developers and researchers who want to work with large language models. Its smaller variants can run on consumer-grade computers.

Key Features of LLaMA 2

- LLaMA 2 proficiently understands the conversational nuances in context to provide accurate responses.

- The model can adjust its style and tone based on the user preferences.

- LLaMA 2 can discuss a wide range of subjects, drawing on the broad knowledge captured during pretraining.

- The chat-tuned variant, Llama 2-Chat, is fine-tuned specifically for dialogue use cases.

- Safety-focused fine-tuning helps minimize harmful or biased outputs.
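Meta's chat-tuned Llama 2 checkpoints expect conversations in a specific layout, with instructions wrapped in `[INST]` tags and an optional system prompt in a `<<SYS>>` block. The Python sketch below follows the template in Meta's reference code as we understand it; treat it as an assumption and check the model card of the checkpoint you deploy. In practice `<s>` and `</s>` are usually added as special tokens by the tokenizer rather than as literal text.

```python
from typing import List, Optional, Tuple

B_INST, E_INST = "[INST]", "[/INST]"
B_SYS, E_SYS = "<<SYS>>\n", "\n<</SYS>>\n\n"


def llama2_chat_prompt(system: str, history: List[Tuple[str, str]], user: str) -> str:
    """Build a Llama-2-Chat style prompt string.

    history: earlier (user, assistant) exchanges, oldest first.
    user: the new message the model should answer.
    """
    turns: List[Tuple[str, Optional[str]]] = list(history) + [(user, None)]
    prompt = ""
    for index, (user_msg, reply) in enumerate(turns):
        content = user_msg.strip()
        if index == 0:
            # The system prompt is folded into the first user turn.
            content = B_SYS + system.strip() + E_SYS + content
        prompt += f"<s>{B_INST} {content} {E_INST}"
        if reply is not None:
            # Completed turns end with the assistant's reply and an EOS marker.
            prompt += f" {reply.strip()} </s>"
    return prompt


if __name__ == "__main__":
    print(llama2_chat_prompt("Be concise.", [("Hi", "Hello!")], "What is an LLM?"))
```

The final turn is left open after `[/INST]` so that the model's next tokens form the assistant's reply.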


4. Bard Nano (Gemini Nano)

Google developed Bard Nano, officially named Gemini Nano, as the smallest model in its Gemini family. The model is lightweight and runs on local devices, making it suitable for edge computing scenarios.

Google's AI-powered chatbot, Bard, was announced in 2023 and renamed Gemini in 2024, aligning its name with the Gemini family of models that powers it.

Bard Nano uses deep learning algorithms to comprehend and create natural language. The model can be trained on a wide range of text data.

Bard Nano includes pre-trained models for multiple languages and can easily be fine-tuned for particular tasks. The model can be used in a variety of applications, such as voice assistants, translation tools, and chatbots.
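Models that run on local devices must fit into limited memory, and weight quantization is one widely used way to shrink them. The Python sketch below is a generic illustration, not Google's implementation: it applies symmetric 8-bit quantization to a hypothetical list of weights, storing each value as a small integer plus one shared scale, which cuts storage from 4 bytes (fp32) to 1 byte per weight.

```python
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor quantization: map [-max|w|, max|w|] onto [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Round to the nearest integer step and clamp to the int8 range.
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: List[int], scale: float) -> List[float]:
    """Recover approximate float weights from int8 values and the shared scale."""
    return [v * scale for v in q]


if __name__ == "__main__":
    weights = [0.5, -1.27, 0.0, 1.0]
    q, scale = quantize_int8(weights)
    restored = dequantize(q, scale)
    print("int8 values:", q)
    print("max error:", max(abs(a - b) for a, b in zip(weights, restored)))
```

Each restored weight is off by at most half a quantization step (`scale / 2`), which is the accuracy traded for a fourfold reduction in memory.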

Key Features of Bard Nano

- One notable enhancement of the Gemini-Bard integration is that it allows Bard to produce higher-quality, more accurate responses by providing a clearer understanding of the user's intent.

- Thanks to Gemini's multimodality, Bard can work with images, audio, and video as well as text, which improves the user experience.

- Google presents the integration of Gemini and Bard as a step toward richer, more nuanced human-AI interaction.

5. Mistral AI

Mistral AI develops foundation models using customized techniques for data processing, training, and tuning. Its open models are high-performance and efficient, and they are available under the Apache 2.0 license for use in real-world applications.

Its foundation is the transformer architecture, a kind of neural network that excels at machine translation and text summarization.

The model performs exceptionally well on various benchmarks, such as math, reasoning, and code generation.

Mistral provides several models with a fully permissive license for free use. The most sophisticated are the Mistral 7B transformer model, the Mixtral 8x7B sparse mixture-of-experts model, and a smaller, English-speaking version with an 8K context window.
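In a sparse mixture-of-experts model such as Mixtral 8x7B, a router picks only 2 of 8 expert networks for each token, so just a fraction of the total parameters does work on any given token. The Python sketch below shows top-k routing with a softmax over the selected experts. It is a toy under stated assumptions: the input is a single number instead of a vector, the router logits are passed in rather than computed by a learned gate, and `EXPERTS` are hypothetical scaling functions.

```python
import math
from typing import Callable, List

Expert = Callable[[float], float]


def softmax(xs: List[float]) -> List[float]:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def sparse_moe(x: float, router_logits: List[float], experts: List[Expert],
               k: int = 2) -> float:
    """Route x to the top-k experts and mix their outputs by gate weight."""
    # Pick the k experts with the highest router scores for this token.
    top = sorted(range(len(experts)), key=lambda i: router_logits[i], reverse=True)[:k]
    # Softmax over the selected experts only, so their weights sum to 1.
    gates = softmax([router_logits[i] for i in top])
    # Only the chosen experts are evaluated; the others are skipped entirely.
    return sum(g * experts[i](x) for g, i in zip(gates, top))


# Eight hypothetical experts: expert i scales its input by (i + 1).
EXPERTS: List[Expert] = [lambda x, s=i + 1: x * s for i in range(8)]

if __name__ == "__main__":
    logits = [0.0, 0.0, 0.0, 5.0, 0.0, 0.0, 5.0, 0.0]
    print(sparse_moe(1.0, logits, EXPERTS))
```

With the logits shown, experts 3 and 6 tie, each gets weight 0.5, and the output is the average of their results.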

Ideal for?

The Mistral AI is well suited for start-ups and midsize businesses seeking extraordinary capabilities and greater efficiency in LLMs.

Key Features of Mistral AI

- It is known for delivering accurate information in a conversational style, which makes it a useful tool for preserving the accuracy and quality of content.

- Its advanced AI models empower users to solve complex challenges.

- One of Mistral AI's standout features is that it offers users a higher degree of customization.

- Mistral AI is a beneficial tool for overcoming language barriers and facilitating communication in different languages.

- Mistral AI has the capability to improve and streamline interactions through the use of intelligent chatbots.

6. MPT-7B

MPT-7B, short for MosaicML Pretrained Transformer, is a GPT-style, decoder-only transformer model. It features architectural modifications that improve training stability and layer implementations optimized for performance.

MPT-7B is an open-source tool useful for commercial applications. It can substantially impact predictive analytics and corporate and organizational decision-making procedures.

Key Features of MPT-7B

- It is an invaluable tool for companies due to its commercial license.

- The model was trained on one trillion tokens of text and code, a large dataset for a model of its size.

- Fast training and inference are key features of the model, helping it deliver prompt output.

- MosaicML reports that MPT-7B matches the quality of LLaMA-7B and outperforms other open-source models in the 7B-20B range.

- Open-source training code for MPT-7B is effective and transparent, making it easier to use.

- MPT-7B is built to handle very long inputs; the MPT-7B-StoryWriter-65k+ variant, for example, was fine-tuned for 65k-token contexts.
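MPT-7B's ability to handle long inputs comes largely from ALiBi (Attention with Linear Biases), which MosaicML uses in place of positional embeddings. ALiBi adds a penalty to each attention score proportional to the distance between query and key, with a different slope per head; because the penalty depends only on distance, the model can be run on longer sequences than it was trained on. The Python sketch below computes the slopes and bias matrix as described in the ALiBi paper for a power-of-two head count; MosaicML's production implementation differs in detail.

```python
from typing import List


def alibi_slopes(num_heads: int) -> List[float]:
    """Per-head slopes 2^(-8k/n) for k = 1..n (n assumed to be a power of two)."""
    return [2 ** (-8 * k / num_heads) for k in range(1, num_heads + 1)]


def alibi_bias(seq_len: int, slope: float) -> List[List[float]]:
    """Bias added to attention scores for one head.

    Row i is the query position, column j the key position. Past keys get
    -slope * distance; future keys get -inf, which also acts as the causal mask.
    """
    return [
        [-slope * (i - j) if j <= i else float("-inf") for j in range(seq_len)]
        for i in range(seq_len)
    ]


if __name__ == "__main__":
    slopes = alibi_slopes(8)
    print("slopes:", slopes)
    for row in alibi_bias(4, slopes[0]):
        print(row)
```

Heads with steep slopes focus on nearby tokens while heads with shallow slopes can still attend far back, which gives the model a mix of local and long-range attention without any learned position parameters.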

7. BLOOM

With an impressive 176 billion parameters, BLOOM is a decoder-only transformer LLM. It was originally designed to generate text in response to prompts, and it can be tuned to carry out specific tasks such as summarization, text creation, semantic search, classification, and embeddings.

The model's training set included hundreds of sources in 46 different languages, which makes it a great choice for multilingual output and language translation.

Ideal for?

BLOOM LLM is ideal for larger businesses that target a global audience that needs multilingual support.

Key Features of BLOOM

- BLOOM was developed by BigScience, an open research collaboration of more than 1,000 researchers coordinated by Hugging Face.

- Its training data spans 46 natural languages and 13 programming languages, so it can generate code as well as text.

- With 176 billion parameters, it is one of the largest openly available multilingual language models.

- The weights are released under the BigScience Responsible AI License (RAIL), which allows broad use while restricting certain harmful applications.

- Smaller checkpoints, such as bloom-560m and bloom-7b1, make it easier to experiment on limited hardware.

8. OPT-175B

OPT-175B is a 175-billion-parameter LLM created by Meta AI Research and the largest model in its Open Pre-trained Transformer (OPT) family.

Trained on a dataset of 180 billion tokens, this LLM required only 1/7th of GPT-3's carbon footprint during training while demonstrating performance comparable to GPT-3.

Key Features of OPT-175B

- Meta released OPT-175B together with its training code and a logbook of the training process, aiming to make large-scale LLM research more reproducible.

- The smaller OPT models (125 million to 66 billion parameters) were released publicly, while access to the full 175B weights was granted on request for non-commercial research.

- Because of its size, OPT-175B needs substantial multi-GPU hardware to run; the smaller checkpoints are far easier to install and operate.

- This LLM aims to match the performance and scale of the GPT-3 class of models.

9. XGen-7B

XGen-7B contains 7 billion parameters, which makes it a large model. Models with even more parameters, such as those with 13 billion, require high-end CPUs, GPUs, RAM, and storage.

One of XGen-7B's main features is its 8K context window. A larger context window lets you provide more context when generating output from the model.

This also allows for longer responses. The 8K context window is the total number of tokens across the input you provide and the output the model generates.
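Because the prompt and the response share one window, every token you spend on input is a token the model cannot use for output. The Python sketch below does that budgeting, assuming "8K" means 8,192 tokens and that token counts come from the model's own tokenizer (not shown); `max_output_tokens` and `fits` are hypothetical helper names.

```python
CONTEXT_WINDOW = 8192  # assumption: "8K" = 8,192 tokens shared by prompt and output


def max_output_tokens(prompt_tokens: int, context_window: int = CONTEXT_WINDOW) -> int:
    """Tokens left for the response once the prompt occupies the window."""
    if prompt_tokens >= context_window:
        raise ValueError("prompt alone fills the context window; truncate or summarize it")
    return context_window - prompt_tokens


def fits(prompt_tokens: int, requested_output: int,
         context_window: int = CONTEXT_WINDOW) -> bool:
    """True if the prompt plus the requested response fit in one window."""
    return prompt_tokens + requested_output <= context_window


if __name__ == "__main__":
    print(max_output_tokens(6000))  # budget left for the answer
    print(fits(6000, 2500))         # requesting too much output
```

With a 6,000-token prompt, only 2,192 tokens remain for the answer, so a request for 2,500 output tokens would not fit.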

Key Features of XGen-7B

- Salesforce reports strong results for XGen-7B on the Massive Multitask Language Understanding (MMLU) benchmark, which tests multiple-choice questions from many subject areas.

- XGen-7B processes up to 8K tokens, making it well suited to tasks that need a deeper understanding of longer narratives.

- The model has been trained on a variety of datasets, including instructional content, providing it with a nuanced understanding of instructions.

The Future of LLMs

With the ongoing and remarkable progress of LLMs, the exciting opportunities they offer today will continue to grow in the future. When it comes to the future of large language models, research is expected to focus on:

- Fine-Tuning and Specialization

- Multimodal Capabilities

- More Customization

- Multilingual Competency

- Working Together with Different AI Models

- Edge Computing Integration

- Training Techniques

- Higher Security and Robustness

Conclusion

Large language model development is at the forefront of innovation in natural language processing. Open-source LLMs like BERT, CodeGen, Llama 2, Mistral, BLOOM, and XGen-7B are leading the way and paving the path for future developments in the field.

Advancements in the LLM field show no sign of slowing down, and we can expect more powerful and ethical models on the horizon.


Frequently Asked Questions


What are Open-Source LLMs?


An open-source LLM is available for free and can be tailored to meet business needs. Companies can utilize these open-source LLMs to manage their requirements without incurring any licensing fees. This involves deploying the LLM to their own infrastructure and customizing it to handle their specific requirements.

What are the challenges of open-source large language models?


One of the major issues with open-source large language models is the quality of their output. These models can generate results that are incoherent or factually incorrect in certain contexts. Because high-quality training data tends to produce better outcomes, it's essential to evaluate the quality of the training data before selecting an open-source LLM.
