A Comprehensive Guide to LLM Leaderboards

LLM leaderboards are useful tools for comparing and choosing large language models (LLMs) based on their performance in different tests. They help developers and businesses find the best AI models and keep track of progress in the field. This blog provides a deep overview of the top LLM leaderboards and insights into their capabilities.

For businesses building custom AI solutions, one of the most challenging decisions is choosing the right LLM, and that choice can shape the outcome of the entire project.

So how can you make the selection easier? One quick way is to consult LLM leaderboards.

LLM leaderboards are scorecards that rank natural language processing models against various evaluation benchmarks. By comparing leading language models side by side, they reveal each model's strengths and weaknesses. Below, we cover the top LLM leaderboards in detail. Let us begin.

Key Takeaways

- LLM leaderboards simplify model selection by offering benchmark-based comparisons of top-performing large language models across various use cases.

- Popular leaderboards like LMSYS, Hugging Face, and MTEB evaluate models on diverse tasks such as conversation, coding, function calling, and text embedding.

- Each leaderboard focuses on different metrics such as accuracy, reasoning, speed, cost-efficiency, and real-world applicability, helping align model choices with business needs.

- While leaderboards guide model evaluation, implementation success depends on having the right expertise to customize and deploy LLMs effectively for your specific goals.

Top 7 Useful LLM Leaderboards for Large Language Model Selection

LLM leaderboards rank language models based on different benchmarks. They help track various language models and compare their performance. These leaderboards are particularly useful for deciding which models to use.

1. LMSYS Chatbot Arena Leaderboard

The LMSYS Chatbot Arena leaderboard evaluates chat models in conversational AI, where chat quality directly shapes user interaction and engagement. It measures how well models handle complex and nuanced conversations, which helps developers improve natural-language human-computer interaction.

Chatbot Arena uses a detailed assessment system that combines human preference votes with the Elo rating method to evaluate large language models.

It uses a “blind” side-by-side comparison. Users submit prompts and vote for the better response from two randomly selected and anonymous models. The system uses votes to create Elo ratings, which rank the models.

The LMSYS Chatbot Arena leaderboard combines the following to provide a comprehensive evaluation:

1. Human Preference: The Chatbot Arena Elo rating system uses real-world user feedback and preferences for conversational tasks.

2. Automated Evaluation: Benchmarks such as AAI and MMLU-Pro provide a clear and comprehensive evaluation of knowledge and abilities in various domains.

Key Features of the Chatbot Arena Evaluation

Blind Testing: The blind nature of the comparisons reduces bias and enables a more objective evaluation of the models' capabilities.

Elo Rating System: The collected votes are used to calculate Elo ratings for each model. This system assigns a score to each LLM based on user votes in pairwise comparisons.

Dynamic Ranking: The leaderboard ranks models based on pairwise comparisons. Rankings can change as new models are added and more votes are cast.

Focus on User Preference: The LMSYS Arena primarily evaluates LLMs on overall user preference, which places human judgment at the center of its rankings.
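To make the Elo idea concrete, here is a minimal sketch of the classic sequential Elo update applied to pairwise votes. The function names, the starting rating of 1000, and K = 32 are illustrative assumptions, not Chatbot Arena's actual parameters, and the live leaderboard may fit its scores with a related statistical model rather than this step-by-step update.

```python
def expected_score(rating_a: float, rating_b: float) -> float:
    """Probability that A beats B under the Elo model (base-10 logistic, scale 400)."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400))


def update_elo(rating_a: float, rating_b: float, score_a: float, k: float = 32.0):
    """Return new (rating_a, rating_b) after one vote.

    score_a is 1.0 if A's response was preferred, 0.0 if B's was, 0.5 for a tie.
    """
    delta = k * (score_a - expected_score(rating_a, rating_b))
    return rating_a + delta, rating_b - delta  # zero-sum: B loses what A gains


def ratings_from_votes(votes, initial: float = 1000.0, k: float = 32.0):
    """Replay (model_a, model_b, score_a) votes in order and return final ratings."""
    ratings = {}
    for model_a, model_b, score_a in votes:
        ra = ratings.setdefault(model_a, initial)
        rb = ratings.setdefault(model_b, initial)
        ratings[model_a], ratings[model_b] = update_elo(ra, rb, score_a, k)
    return ratings


if __name__ == "__main__":
    # Hypothetical anonymous votes between three placeholder models.
    votes = [("model-x", "model-y", 1.0), ("model-x", "model-z", 1.0), ("model-y", "model-z", 0.5)]
    for name, rating in sorted(ratings_from_votes(votes).items(), key=lambda kv: -kv[1]):
        print(f"{name}: {rating:.1f}")
```

Because each vote only nudges ratings by K times the "surprise" (actual minus expected score), an upset against a highly rated model moves the ratings more than an expected win, which is why rankings keep shifting as votes accumulate.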

Purpose and benefits of LMSYS Chatbot Arena Leaderboard

The LMSYS Chatbot Arena Leaderboard has several goals and advantages.

- The LMSYS leaderboard offers a clear, ongoing assessment of LLMs in conversation.

- The Arena allows direct comparisons between the performance of different LLMs.

- It helps researchers and developers identify trends and areas for improvement in LLMs.

- It helps businesses select the right LLMs for their specific needs.

2. Hugging Face Open LLM Leaderboard

The Hugging Face Open LLM Leaderboard enhances transparency and fosters open collaboration in language model evaluation. It supports a wide range of tasks and datasets and encourages contributions from developers: users can submit new models, report errors, or add information to the leaderboard, improving the quality and accuracy of its data. This approach promotes diversity in model entries and continuous improvement in benchmarking methods.

Hugging Face Evaluation Benchmarks

The Hugging Face Open LLM Leaderboard has assessed LLMs using widely accepted benchmarks such as the following (the exact set has changed between versions of the leaderboard):

- The AI2 Reasoning Challenge (ARC) tests the language model's ability to answer grade-school science questions that require both specific knowledge and reasoning skills.

- HellaSwag evaluates commonsense inference by requiring models to choose the most plausible ending to a given scenario.

- Massive Multitask Language Understanding (MMLU) is a comprehensive benchmark that covers 57 tasks across multiple domains, including mathematics, law, computer science, and more.

- TruthfulQA measures the model's ability to provide truthful answers and resist generating misinformation.

- IFEval tests whether models follow explicit, verifiable instructions contained in prompts, such as a required length or output format.

- Winogrande evaluates commonsense reasoning by requiring models to detect the antecedents of pronouns in ambiguous sentences accurately.

- Grade School Math 8K (GSM8K) assesses the model's ability to understand, reason about, and solve multi-step grade-school math word problems.
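Several of these benchmarks (ARC, HellaSwag, MMLU, Winogrande) are multiple choice. A common way open-source evaluation harnesses score them is to ask the model for the log-likelihood of each answer option and count the item as correct if the most likely option is the gold one. The sketch below assumes those log-likelihoods have already been computed; the class, function names, and numbers are hypothetical, and GSM8K or IFEval are scored differently (by checking generated answers).

```python
from dataclasses import dataclass
from typing import List


@dataclass
class MultipleChoiceItem:
    choice_logprobs: List[float]  # model's log-likelihood of each answer option
    choice_lengths: List[int]     # length of each option (e.g. characters), for normalization
    gold: int                     # index of the correct option


def predict(item: MultipleChoiceItem, length_normalized: bool = False) -> int:
    """Return the index of the option the model finds most likely."""
    scores = item.choice_logprobs
    if length_normalized:
        # Longer options accumulate more negative log-likelihood; dividing by
        # length keeps the scorer from systematically favoring short answers.
        scores = [lp / n for lp, n in zip(item.choice_logprobs, item.choice_lengths)]
    return max(range(len(scores)), key=lambda i: scores[i])


def accuracy(items: List[MultipleChoiceItem], length_normalized: bool = False) -> float:
    """Fraction of items whose top-scoring option matches the gold answer."""
    correct = sum(predict(item, length_normalized) == item.gold for item in items)
    return correct / len(items)


if __name__ == "__main__":
    # Hypothetical scores for two questions.
    items = [
        MultipleChoiceItem([-4.0, -2.5, -6.0], [10, 10, 12], gold=1),
        MultipleChoiceItem([-9.0, -12.0], [30, 60], gold=1),
    ]
    print(accuracy(items), accuracy(items, length_normalized=True))
```

In this toy data the second question's correct answer is much longer, so raw log-likelihood picks the wrong option while the length-normalized score picks the right one.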

Key Features of Hugging Face LLM Leaderboard

- Transparency through open-source access

- A comprehensive set of performance metrics

- Regular updates that reflect the latest advancements in model development

3. CanAiCode LLM Leaderboard

The CanAiCode Leaderboard evaluates and ranks AI models based on their coding capabilities. It uses human-written interview questions that the AI models must answer, testing each model's ability to understand requirements, generate code, and solve programming problems.

The leaderboard also considers the number of tokens generated or processed by each model, as this impacts both performance and cost in code generation tasks.

Based on recent data, the top results on the CanAiCode Leaderboard are:

- Google Gemini 2.5 Pro scores highest, with 0.77 in AI-assisted code.

- Anthropic Claude Opus 4 achieves a score of 0.72.

- Claude Sonnet 4 scores 0.71.


Evaluation Benchmarks for CanAiCode Leaderboard

- Human-authored interview questions: These questions are designed to be relevant to real-world scenarios and cover a range of difficulty levels, from junior to senior-level development.

- AI-generated solutions: AI models are tasked with solving these interview questions, and their code is automatically tested for correctness and efficiency.

- Realistic Environment: A Docker-based sandbox is used to validate the untrusted Python and NodeJS code generated by the models.
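The automated-testing step can be sketched as: run the model's code in a separate process with a timeout, feed it test inputs, and count how many outputs match. This is a minimal illustration under assumptions: `run_candidate` and the harness template are hypothetical names, and a plain child process is not a security sandbox, which is exactly why the leaderboard runs untrusted code inside Docker.

```python
import json
import subprocess
import sys

# Appended after the candidate's code; {func} is replaced with the function name.
HARNESS = """
import json as _json, sys as _sys
_cases = _json.loads(_sys.stdin.read())
_results = []
for _args, _expected in _cases:
    try:
        _results.append({func}(*_args))
    except Exception as _exc:
        _results.append({{"error": repr(_exc)}})
print(_json.dumps(_results))
"""


def run_candidate(code, func_name, test_cases, timeout=5.0):
    """Return the fraction of (args, expected) cases that func_name passes.

    WARNING: a child process is NOT a sandbox; isolate untrusted code in a container.
    """
    # Round-trip through JSON so expected values compare like-for-like with outputs.
    cases = json.loads(json.dumps(test_cases))
    program = code + "\n" + HARNESS.format(func=func_name)
    try:
        proc = subprocess.run(
            [sys.executable, "-c", program],
            input=json.dumps(cases),
            capture_output=True,
            text=True,
            timeout=timeout,
        )
    except subprocess.TimeoutExpired:
        return 0.0  # e.g. an infinite loop counts as a failure
    lines = proc.stdout.strip().splitlines()
    if proc.returncode != 0 or not lines:
        return 0.0
    try:
        outputs = json.loads(lines[-1])  # the harness prints its JSON last
    except ValueError:
        return 0.0
    passed = sum(out == expected for out, (_, expected) in zip(outputs, cases))
    return passed / len(cases)


if __name__ == "__main__":
    candidate = "def add(a, b):\n    return a + b\n"  # stands in for model output
    cases = [([1, 2], 3), ([-1, 1], 0), ([2, 2], 5)]  # last case is deliberately wrong
    print(run_candidate(candidate, "add", cases))
```

Running each test case in its own `try` block means one crashing input only fails that case, while a hang or a syntax error fails the whole submission.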

Ready to Build with the right LLM?

Our AI experts can help you implement, fine-tune, and deploy high-performing LLMs tailored to your unique business needs.

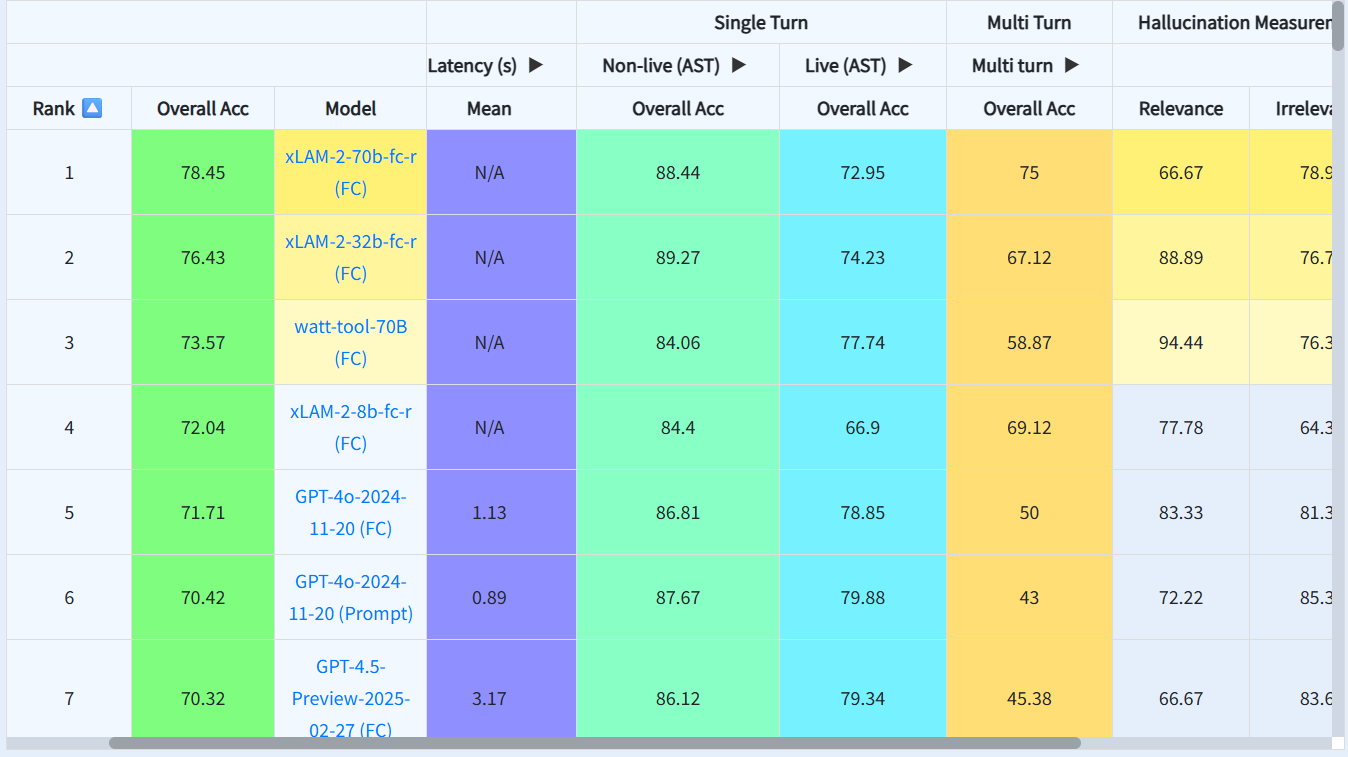

4. Berkeley Function-Calling Leaderboard

The Berkeley Function Calling Leaderboard (BFCL) is a benchmark that evaluates and compares the ability of large language models to perform function calling. This capability allows LLMs to interact with the external world, automate tasks, fetch real-time information, and integrate with APIs and services. Cloud providers like Amazon offer platforms for deploying and optimizing function-calling models, supporting integration with various APIs and services.

BFCL aims to provide a comprehensive and realistic evaluation of LLMs' function-calling capabilities across a variety of scenarios. To assess the correctness of the function calls that LLMs generate, it uses a novel Abstract Syntax Tree (AST) substring matching technique, complemented by execution-based evaluation where feasible.

BFCL is well regarded because it includes a variety of tasks and real-world data. It also covers important features like multi-turn interactions and relevance detection, which many other benchmarks ignore.

Evaluation Benchmarks of BFCL

- Single-turn focuses on scenarios involving a single function call.

- Crowd-Sourced includes 2,251 real-world function-calling examples contributed by the community, showcasing practical use cases.

- Multi-Turn evaluates the LLM's ability to maintain context and make dynamic decisions during conversations that involve multiple function calls. This category features eight API suites and 1,000 queries to test sustained context management and decision-making skills.

- Agentic evaluates the LLM's ability to act as an AI agent.
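The AST-based idea can be illustrated with Python's standard `ast` module: parse the model's output as a call expression, then compare the function name and arguments against an answer key instead of doing a brittle string comparison. This is a simplified sketch, not BFCL's actual checker (which also validates types against function schemas and handles positional, parallel, and multiple calls); `get_weather`, its parameters, and the helper names are hypothetical.

```python
import ast


def parse_call(source):
    """Parse one keyword-argument call such as 'get_weather(city="Berlin")'
    into (function_name, {param: value})."""
    node = ast.parse(source.strip(), mode="eval").body
    if not isinstance(node, ast.Call):
        raise ValueError("output is not a single function call")
    if node.args:
        raise ValueError("positional arguments are not handled in this sketch")
    name = ast.unparse(node.func)  # keeps dotted names such as 'weather.get'
    # literal_eval accepts only literals, so arbitrary expressions are rejected.
    params = {kw.arg: ast.literal_eval(kw.value) for kw in node.keywords}
    return name, params


def call_matches(source, expected_name, accepted_values, required):
    """True if the call names the expected function, supplies every required
    parameter, and uses only accepted values for each parameter it passes."""
    try:
        name, params = parse_call(source)
    except (SyntaxError, ValueError, TypeError):
        return False
    if name != expected_name or not set(required).issubset(params):
        return False
    return all(
        key in accepted_values and value in accepted_values[key]
        for key, value in params.items()
    )


if __name__ == "__main__":
    accepted = {"city": ["Berlin", "Berlin, Germany"], "unit": ["celsius", "C"]}
    required = {"city"}
    print(call_matches('get_weather(city="Berlin", unit="C")', "get_weather", accepted, required))
```

Listing several accepted values per parameter lets equivalent answers (such as "C" and "celsius") both pass, which is the main advantage of structural matching over exact string equality. `ast.unparse` requires Python 3.9 or later.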

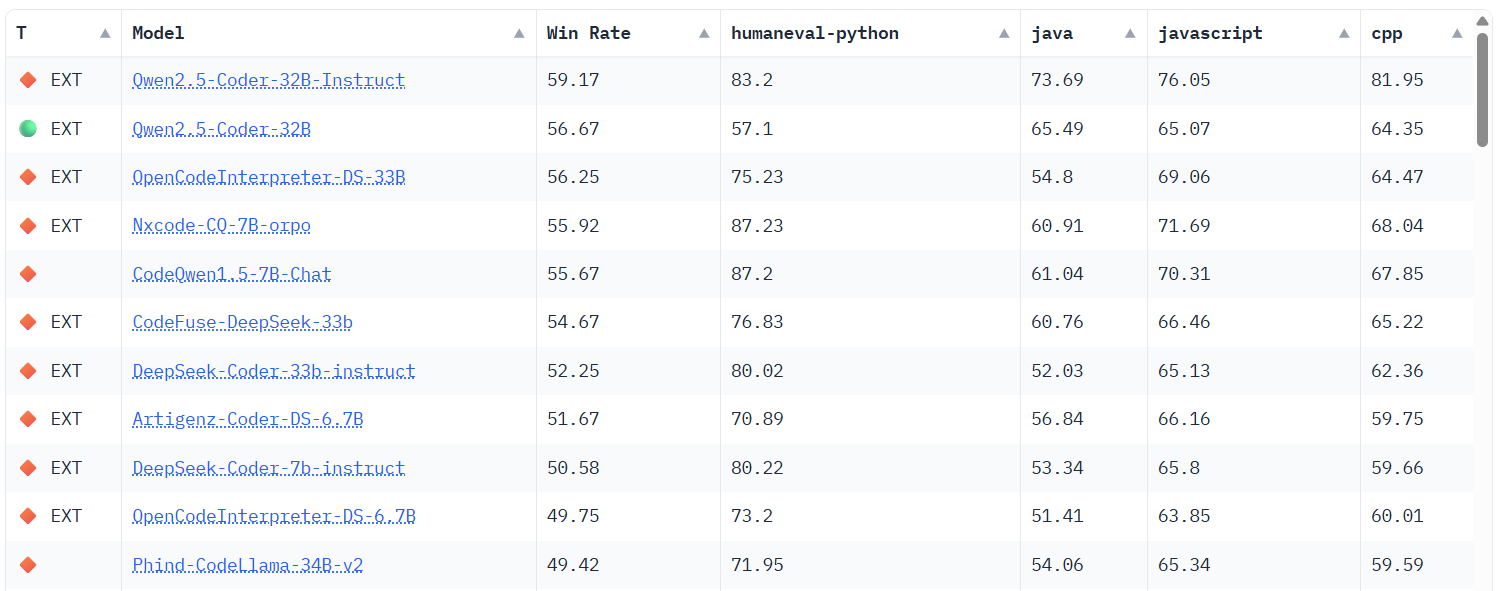

5. Big Code Models Leaderboard

The BigCode Models Leaderboard evaluates multilingual code generation models on the HumanEval benchmark and on MultiPL-E, a translation of HumanEval into 18 programming languages. It also includes throughput measurements to compare inference speed.

Based on recent data, the top LLMs on the Big Code Models Leaderboard are:

- Qwen 2.5 Coder 32B Instruct scores the highest, with 59.17.

- DeepSeek Coder 7B scores 52.25.

- CodeGemma 7B has a score of 33.83.

The leaderboard also features models and variants such as Gemma, Maverick, Scout, Oct, Llama 3.1, and Turbo, each optimized for different aspects of code generation and performance. Major organizations like Meta and IBM contribute advanced code models to the leaderboard, highlighting their enterprise and research focus. Some models are currently in beta, allowing users to preview their capabilities before official release.

Evaluation Benchmarks of Big Code Models Leaderboard

- Pass@k: Used in benchmarks like HumanEval, this metric estimates the probability that at least one of k sampled solutions passes all unit tests, meaning the problem is solved correctly.

- Accuracy: Benchmarks like SWE-bench evaluate the correctness of the generated code or fixes.

- Throughput: The Big Code Models Leaderboard measures how many tokens per second a model generates at a given batch size.
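Pass@k is usually not computed by literally drawing k samples. The unbiased estimator introduced alongside HumanEval generates n ≥ k samples per problem, counts the c that pass, and computes 1 − C(n−c, k) / C(n, k), the chance that a random size-k subset contains at least one passing sample. The sketch below implements that formula; the function names and sample counts are illustrative.

```python
from math import comb


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k for one problem: n samples generated, c of them pass all tests."""
    if n - c < k:
        # Every size-k subset must contain at least one passing sample.
        return 1.0
    return 1.0 - comb(n - c, k) / comb(n, k)


def benchmark_pass_at_k(results, k: int) -> float:
    """Average pass@k over problems, given (n, c) pairs per problem."""
    return sum(pass_at_k(n, c, k) for n, c in results) / len(results)


if __name__ == "__main__":
    # Hypothetical results: 10 samples per problem, 3 passing and 0 passing.
    results = [(10, 3), (10, 0)]
    print(benchmark_pass_at_k(results, k=1), benchmark_pass_at_k(results, k=5))
```

For k = 1 the estimator reduces to c / n, the plain fraction of passing samples; larger k rewards models that solve a problem at least occasionally.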

6. Massive Text Embedding Benchmark (MTEB) Leaderboard

The Massive Text Embedding Benchmark (MTEB) leaderboard evaluates and compares the performance of text embedding models. It is hosted on Hugging Face and provides a standardized way to assess these models across a diverse range of tasks and languages.

The benchmark evaluates over 100 text and image embedding models on more than 56 datasets and 8 different NLP tasks. It is publicly accessible on GitHub and the Hugging Face platform, promoting transparency and collaboration.

Some of the top-performing models as of early 2025 are:

- NV-Embed-v2, developed by NVIDIA, is a generalist embedding model fine-tuned from the Mistral 7B LLM. It scored 72.31 across 56 tasks.

- Nomic-Embed-Text-v1.5 is a multimodal model developed by Nomic.

Evaluation Benchmarks of MTEB Leaderboard

MTEB evaluates models across eight distinct embedding tasks, each covering multiple datasets and languages:

- Bitext mining identifies matching sentences in two different languages.

- Classification categorizes texts by training a logistic regression classifier on their embeddings.

- Clustering groups similar texts together using mini-batch k-means, scored with V-measure.

- Pair classification determines whether two texts are similar.

- Reranking orders a list of relevant and irrelevant texts by their relevance to a query.

- Retrieval finds the documents in a corpus that are relevant to a given query.

- Semantic Textual Similarity (STS) measures the similarity between sentence pairs.

- Summarization evaluates machine-generated summaries against human-written ones using Spearman correlation based on cosine similarity.
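The scoring behind STS (and, similarly, summarization) combines the two ingredients named above: cosine similarity between the embeddings of each text pair, then Spearman rank correlation between those similarities and human-assigned scores. Here is a minimal pure-Python sketch; the three-dimensional "embeddings" and gold scores are toy values standing in for real model outputs and dataset labels.

```python
import math


def cosine_similarity(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def ranks(values):
    """1-based ranks; tied values share the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for idx in order[i:j + 1]:
            result[idx] = (i + j) / 2 + 1
        i = j + 1
    return result


def spearman(x, y):
    """Spearman correlation = Pearson correlation of the ranks."""
    rx, ry = ranks(x), ranks(y)
    mx, my = sum(rx) / len(rx), sum(ry) / len(ry)
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    sx = math.sqrt(sum((a - mx) ** 2 for a in rx))
    sy = math.sqrt(sum((b - my) ** 2 for b in ry))
    return cov / (sx * sy)


if __name__ == "__main__":
    # Toy embedding pairs and human similarity ratings (0 to 5 scale).
    pairs = [([1, 0, 0], [1, 0, 0]), ([1, 0, 0], [1, 1, 0]), ([1, 0, 0], [0, 1, 0])]
    gold = [5.0, 3.2, 0.4]
    predicted = [cosine_similarity(u, v) for u, v in pairs]
    print(spearman(predicted, gold))
```

Using rank correlation means a model only needs to order pairs the way humans do; the absolute cosine values do not have to match the rating scale.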

7. Artificial Analysis LLM Performance Leaderboard

The Artificial Analysis LLM Performance Leaderboard provides a comprehensive evaluation of large language models (LLMs) and their API providers. It differentiates itself by offering a holistic assessment that considers model quality and performance metrics like speed, latency, and pricing, alongside context window size.

The image below compares and ranks the performance of over 100 AI models (LLMs) across key metrics.

Artificial Analysis LLM Performance Leaderboard Evaluation Benchmarks

- Quality is evaluated using a simple index that combines scores from benchmarks like MMLU, MT-Bench, and HumanEval.

- Pricing measures the cost of using the models, including per-token input and output pricing, as well as a blended price calculation assuming a 3:1 input-to-output ratio.

- Speed via Throughput represents the rate at which the models generate tokens. It is measured in tokens per second (TPS).

- Speed via Latency measures the time to first token (TTFT), or how long it takes for the model to generate the first token of a response.

- Context window is the maximum number of tokens an LLM can process at once.
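The pricing and speed metrics reduce to simple arithmetic. The sketch below computes a 3:1 blended price and derives TTFT and output throughput from streaming timestamps. The function names, the example prices, and the choice to measure throughput from the first token to the last are assumptions for illustration; Artificial Analysis may define its measurement window differently.

```python
from dataclasses import dataclass


def blended_price(input_per_m: float, output_per_m: float, ratio=(3, 1)) -> float:
    """Blended price per 1M tokens, weighting input and output prices 3:1 by default."""
    w_in, w_out = ratio
    return (w_in * input_per_m + w_out * output_per_m) / (w_in + w_out)


@dataclass
class StreamTiming:
    request_sent: float  # seconds, e.g. from time.perf_counter()
    first_token: float   # when the first output token arrived
    last_token: float    # when the final output token arrived
    output_tokens: int


def time_to_first_token(t: StreamTiming) -> float:
    return t.first_token - t.request_sent


def output_tokens_per_second(t: StreamTiming) -> float:
    """Throughput over the generation phase, i.e. after the first token arrives."""
    return t.output_tokens / (t.last_token - t.first_token)


if __name__ == "__main__":
    # Hypothetical prices: $3 per 1M input tokens, $15 per 1M output tokens.
    print(blended_price(3.0, 15.0))
    timing = StreamTiming(request_sent=0.0, first_token=0.4, last_token=5.4, output_tokens=500)
    print(time_to_first_token(timing), output_tokens_per_second(timing))
```

Separating TTFT from throughput matters because a model can start answering quickly yet stream slowly, or the reverse, and different applications care about different halves.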

The Right LLM Isn’t Always the Top-Ranked One

Let us guide you to the model that matches your business needs, not just leaderboard scores.

Key Metrics LLM Leaderboards Use to Evaluate LLMs

LLM leaderboards use various evaluation metrics to rank language models based on important criteria. Let’s look at the most common standards used for these rankings.

Accuracy and Performance

Models are evaluated on tasks such as classification, sentiment analysis, and summarization, which measure how accurately they handle different kinds of language processing.

Natural Language Understanding (NLU)

Datasets like GLUE and SuperGLUE assess an LLM’s understanding of human language through evaluation in areas like reading comprehension and sentence similarity.

Generative Capabilities

Text coherence, creativity, and relevance are evaluated through generative AI tasks. This involves creating well-structured and engaging responses that are contextually appropriate. Generative strength reflects the ability to produce compelling, human-like text beyond just factual accuracy.

Reasoning and Problem-Solving

LLM leaderboards evaluate models' problem-solving abilities through logical and mathematical reasoning benchmarks.

Multimodal Capabilities

As AI systems become more capable, LLM leaderboards have expanded to evaluate models that can associate text with images, audio, and other forms of media.

Domain-Specific Performance

Industry-focused exams assess the performance of language models (LLMs) in specific fields, such as healthcare and finance. These tests check how effectively the model understands specialized language and uses reasoning relevant to those areas.

Latency and Speed

Fast responses matter as much as accurate ones for chatbots and virtual assistants. A model that provides precise answers but responds slowly can frustrate users, and low latency is essential in high-demand situations where every millisecond counts.

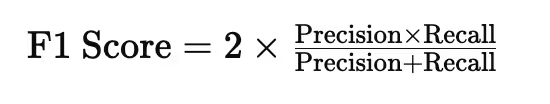

F1 Score

The F1 score is a metric used to evaluate the performance of language models in classification and information retrieval tasks. It combines two important measures, precision and recall, as their harmonic mean:

$$F_1 = 2 \cdot \frac{\text{Precision} \cdot \text{Recall}}{\text{Precision} + \text{Recall}}$$

Here,

- Precision indicates the proportion of predicted positive results that are actually correct. It tells us the percentage of selected items that are relevant.

- Recall is the proportion of actual positive cases that the model correctly identifies. It tells us the percentage of relevant items that were selected.
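Computing F1 from raw counts makes the trade-off visible: false positives lower precision, missed items lower recall, and the harmonic mean punishes whichever is worse. This is a minimal sketch with hypothetical function names; the retrieval example treats document IDs as sets.

```python
def precision_recall_f1(tp: int, fp: int, fn: int):
    """Precision, recall, and F1 from true-positive, false-positive, and false-negative counts."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    if precision + recall == 0:
        return precision, recall, 0.0
    return precision, recall, 2 * precision * recall / (precision + recall)


def f1_from_sets(predicted: set, relevant: set):
    """Retrieval-style scoring: compare retrieved item IDs with the relevant ones."""
    tp = len(predicted & relevant)  # retrieved and relevant
    fp = len(predicted - relevant)  # retrieved but not relevant
    fn = len(relevant - predicted)  # relevant but missed
    return precision_recall_f1(tp, fp, fn)


if __name__ == "__main__":
    # Hypothetical retrieval result: 3 documents returned, 4 actually relevant.
    p, r, f1 = f1_from_sets({"d1", "d2", "d3"}, {"d1", "d3", "d4", "d5"})
    print(f"precision={p:.3f} recall={r:.3f} f1={f1:.3f}")
```

Here precision is 2/3 and recall is 1/2, giving an F1 of 4/7, which sits closer to the weaker of the two scores than a simple average would.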

Bilingual Evaluation Understudy (BLEU) Score

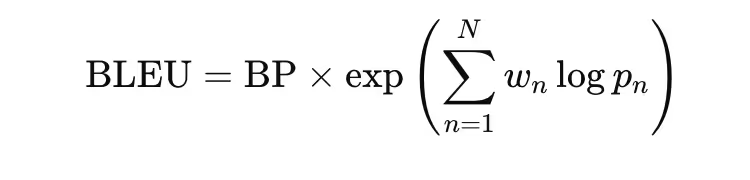

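The BLEU score measures the quality of machine-translated text by comparing it to human reference translations. It counts how many word sequences (n-grams) in the machine-generated text also appear in the references, and penalizes outputs that are shorter than the references.

The BLEU score is calculated using this formula:

$$\text{BLEU} = \text{BP} \cdot \exp\left(\sum_{n=1}^{N} w_n \log p_n\right), \qquad \text{BP} = \begin{cases} 1 & \text{if } c > r \\ e^{\,1 - r/c} & \text{if } c \le r \end{cases}$$

where BP is the brevity penalty, c is the length of the machine-generated (candidate) text, r is the length of the reference, and N is the largest n-gram size used.

- w_n is the weight for the n-gram precision score. The weights are usually uniform, w_n = 1/N, where N is the number of different n-gram sizes used (typically N = 4, so each w_n = 1/4).

- p_n is the modified precision for n-grams of size n: the fraction of the candidate's n-grams that also appear in the reference, with each n-gram's count clipped to the number of times it occurs in the reference.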
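The formula translates directly into code. Below is a minimal sentence-level sketch with a single reference, uniform weights, and no smoothing; the function names are illustrative, and real evaluations usually compute corpus-level BLEU with standardized tokenization through a dedicated tool.

```python
import math
from collections import Counter


def ngrams(tokens, n):
    """Count every contiguous n-token sequence."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def modified_precision(candidate, reference, n):
    """p_n: candidate n-gram matches, each clipped to its count in the reference."""
    cand, ref = ngrams(candidate, n), ngrams(reference, n)
    clipped = sum(min(count, ref[gram]) for gram, count in cand.items())
    total = sum(cand.values())
    return clipped / total if total else 0.0


def bleu(candidate, reference, max_n=4):
    weights = [1.0 / max_n] * max_n  # uniform weights w_n = 1/N
    precisions = [modified_precision(candidate, reference, n) for n in range(1, max_n + 1)]
    if min(precisions) == 0.0:
        return 0.0  # log(0) is undefined; unsmoothed BLEU is zero here
    c, r = len(candidate), len(reference)
    bp = 1.0 if c > r else math.exp(1 - r / c)  # brevity penalty
    return bp * math.exp(sum(w * math.log(p) for w, p in zip(weights, precisions)))


if __name__ == "__main__":
    reference = "the cat sat on a mat".split()
    print(bleu("the cat sat on the mat".split(), reference))
    print(bleu("the cat".split(), reference, max_n=2))  # perfect matches, but too short
```

In the first example p_1 through p_4 are 5/6, 3/5, 2/4, and 1/3 (the repeated "the" is clipped to one match), so the score is their geometric mean. The second example has perfect n-gram precision but is only a third of the reference length, so the brevity penalty e^{1-3} pulls the score down.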

Put LLM Leaderboard Winners to Work

Our experts help you select, fine-tune, and integrate the best models for your needs.

Bottom Line

We hope this blog has given you the information you need about the top LLM leaderboards. While these leaderboards are invaluable for measuring performance and choosing the right large language model, they show only the surface of the problem.

The bigger challenge, and one that many businesses struggle with, is finding the right expertise to implement the chosen model. That is why we are here to make it easier for you.

We have years of proven experience developing custom solutions, and our team brings the blend of skills your project needs. We deliver advanced LLM development services and solutions tailored to your business. Get in touch today.
